What's the carbon footprint of using ChatGPT or Gemini? [August 2025 update]

A new study from Google suggests its Gemini LLM uses around 0.24 Wh per text query. That's the same energy as using a microwave for one second.

Summary:

My main conclusion is no different from my initial post: individual usage of ChatGPT and other LLMs for most people is a small part of their carbon and energy footprint.

The energy use “per query” is possibly 10 times lower than estimated in the previous article. Google estimates that its median text query uses around 33 times less energy than it did 12 months ago, so a tenfold drop is plausible.

Google says that its median text query uses around 0.24 Wh of electricity. That’s a tiny amount: equivalent to microwaving for one second, or running a fridge for 6 seconds.

Image generation (not from Gemini — they didn’t estimate this) is possibly comparable to a standard text response? This surprised me, and I’m still a bit sceptical without more data.

Generating video seems to be energy-hungry (although we don’t have many estimates for this). I’ve never made a video, and I’m not sure I know anyone who does. But the advice that “LLMs are a small part of your environmental footprint” might not apply if you’re generating a lot of video. In that case, it would be substantial.

The fact that AI chatbots are a small part of most individual footprints does not mean I think AI and data centres as a whole are not a problem for energy use and carbon emissions. I think that, particularly at a local level, managing load growth will be a challenge.

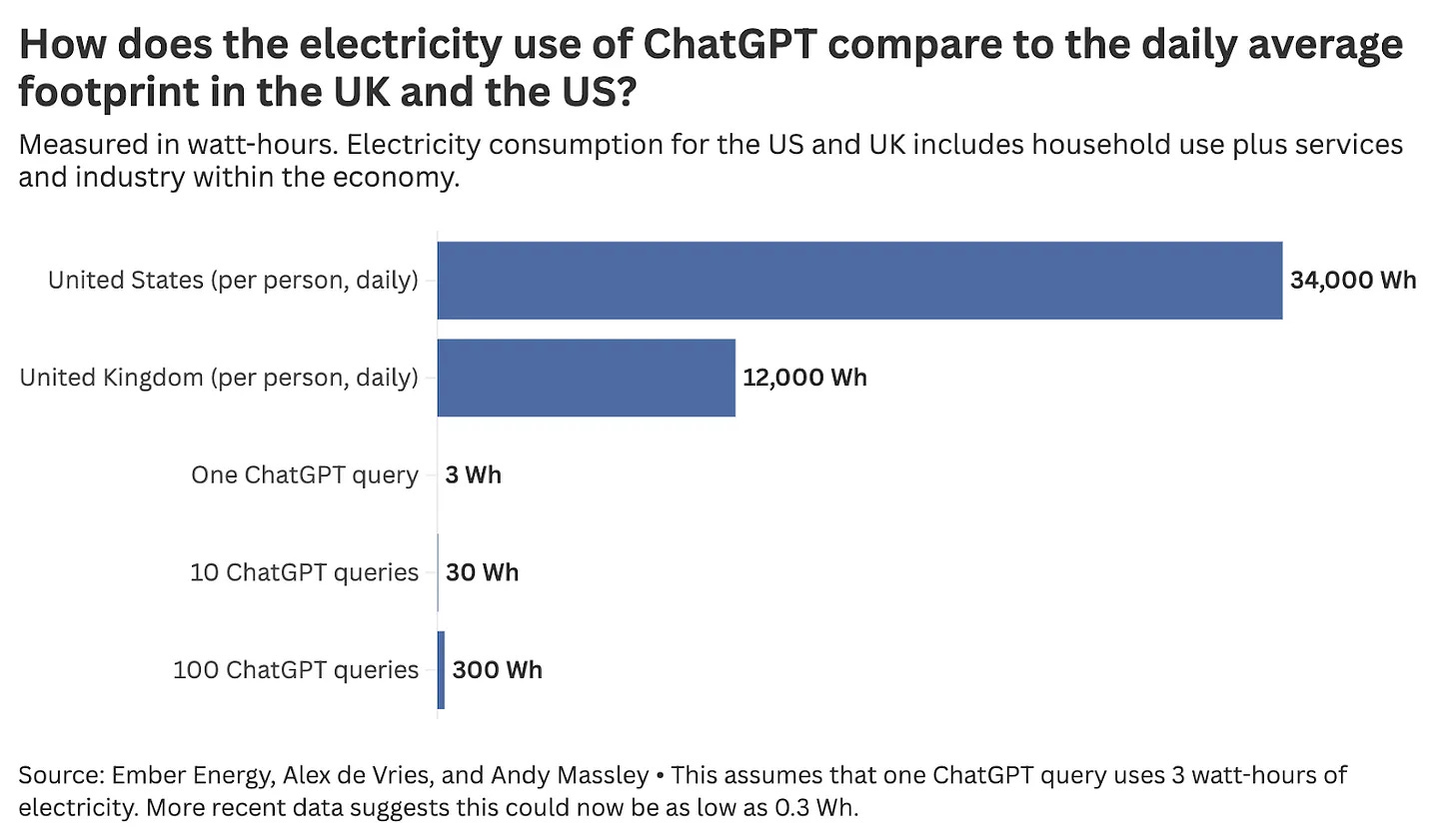

In May, I wrote an article looking at estimates of the energy use and carbon emissions of a ChatGPT query.

There were a few key takeaways from that article.

First, for most people, even moderate use of ChatGPT is a very small part of their footprint.

Second, tech companies are not very transparent about these numbers (that’s an understatement), so these estimates were rough: a “best estimate” that people had cobbled together from the “reasonable” references that are out there.

Third, as a result, more recent estimates suggested that the assumptions I relied on (h/t to Andy Masley’s work on this) — that one standard query used 3 watt-hours (Wh) of electricity — were possibly an order of magnitude too high. In this case, I was happy to be conservative and overestimate the energy use.

I said in that article that it wouldn’t be the last time I wrote on the topic, because hopefully, better or new estimates would appear. So here’s an update. The main thing I want to cover is new, more transparent estimates from Google on its Gemini LLM. But first, here’s a short round-up of other announcements or interesting links.

Sam Altman, CEO of OpenAI, published a blog post in June covering a range of AI updates. In it, he casually mentioned that a standard text query uses 0.34 Wh of electricity. Remember, that’s close to the updated estimate that EpochAI came up with (0.3 Wh), and is ten times lower than what I had assumed in the last post. Altman did not share any maths or methodology behind this, so I wouldn’t index much on it. I don’t know whether to see this as useful confirmation that EpochAI and Altman landed on a similar estimate, or whether Altman wanted to publish a number, found EpochAI’s new analysis, and relied on that. Without more information, I would take it with a pinch of salt. But it is one additional data point, at least.

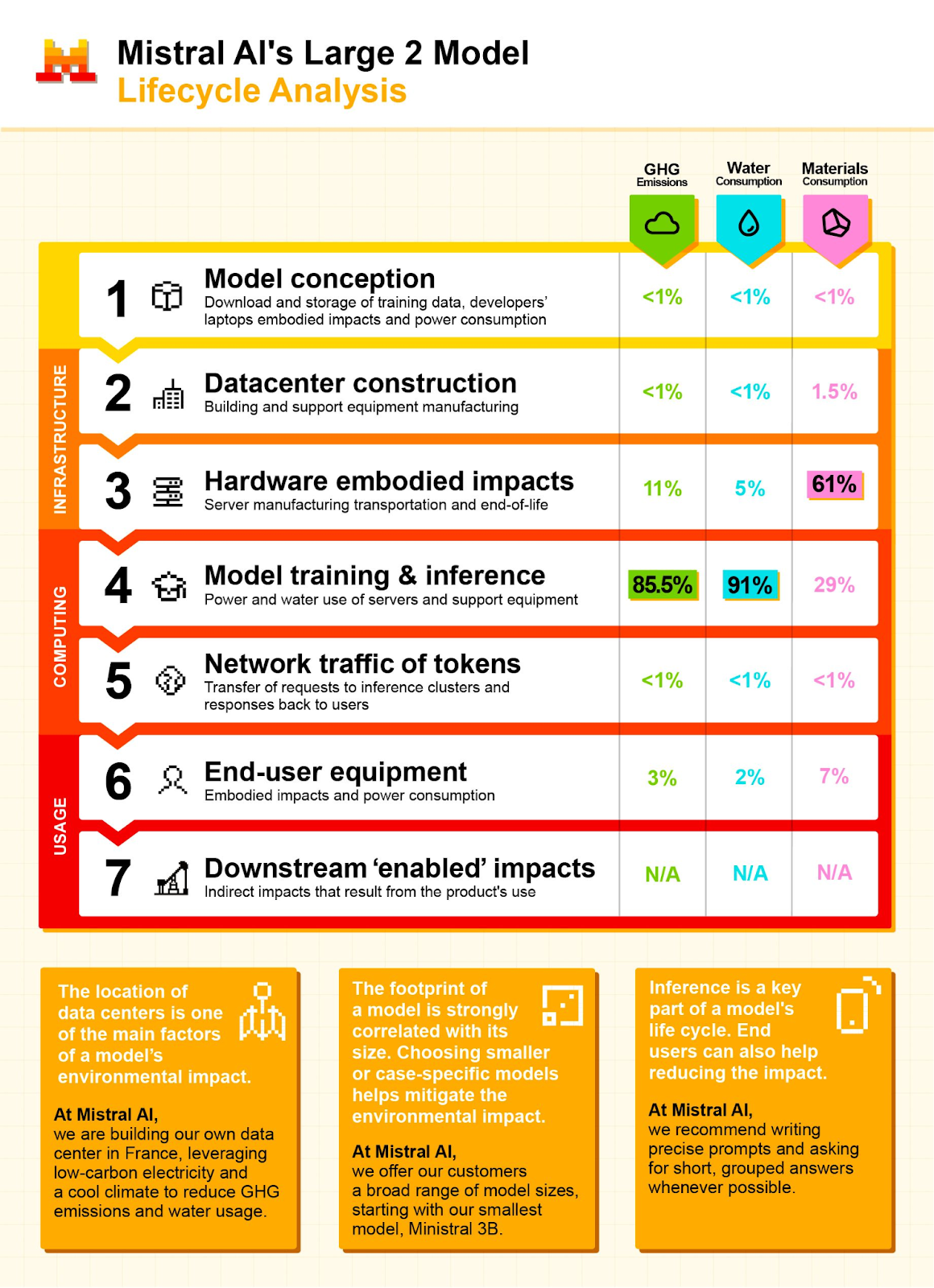

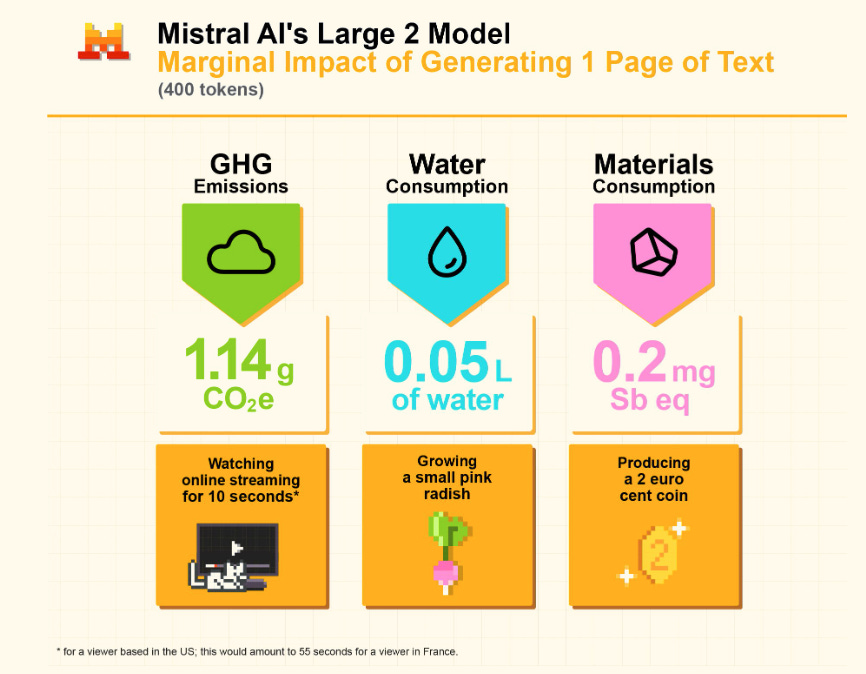

Mistral AI, another AI company, conducted an environmental analysis of its LLMs. It used a life-cycle assessment, conducted by external consultancy agencies. While the methodology was not that transparent or detailed, it did provide breakdowns of where in the process the impacts came from (I just wish they’d split out inference from training). Overall, the impacts were low: just 1 gram of CO2 per page of text generated (which is a fairly long text response). That’s very low.

This article in MIT Technology Review, “We did the math on AI’s energy footprint. Here’s the story you haven’t heard”, was published in May, and was a good overview of many of the complexities. Some stand-out numbers: it quoted an estimate of around 0.93 Wh (let’s call it 1 Wh) for an average Llama text query response. Between 0.3 and 1.2 Wh for generating an image (which was less than I had expected). And a lot more for generating video using OpenAI’s Sora: nearly 1 kWh to generate a 5-second video. I have some scepticism that it’s so energy-intensive, but without other estimates, I would still assume that video uses a lot of energy for now (and then update later if that turns out not to be the case).

Again, I wouldn’t take any of these single estimates as the ultimate number, but there does appear to be some sort of convergence on the magnitude of these LLM searches. The 3 Wh estimate in my previous article was probably too high, and something a little lower — possibly as low as 0.3 Wh — seems possible.

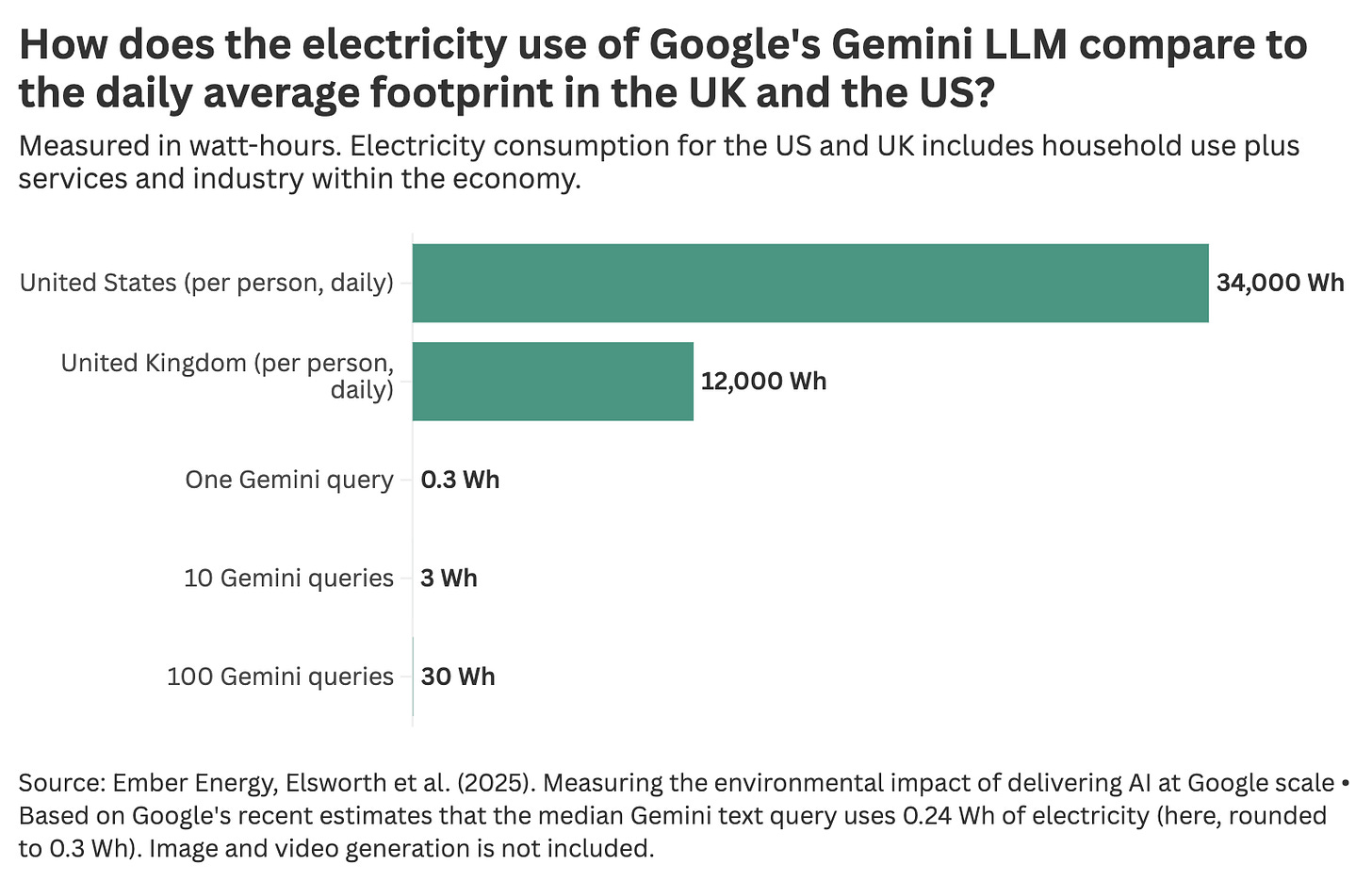

Google's new assessment, published on its Gemini model, further confirms this. It estimates that the median text query used 0.24 Wh and emitted just 0.03 grams of CO2e. Let’s take a look at the results.

Google estimates that its Gemini model uses just 0.24 Wh per standard text query: the same as watching television for 9 seconds

Google is the first big tech company to produce a detailed report on the per-query impact of its LLM, and that’s extremely welcome.1 Even if people have critiques of the methodology, the fact that it’s laid out in some detail makes it possible to challenge more specifically. It’s impossible to properly engage when it’s just the CEO giving a number without any context.

Their main result is that the median text query using Gemini uses 0.24 watt-hours of electricity. Here, “median text query” was the median length and depth of response across all of its text searches in May 2025. So this spans from the incredibly short, all the way up to Deep Research searches.2 Unfortunately we don’t have further information on the distribution, so it’s hard to really know what length of response this is. Obviously longer responses will use more energy.

What’s interesting is that they also report large efficiency gains over the last year. They estimate that 12 months ago, the per-query energy use was 33 times higher. That means the standard text prompt was using around 8 Wh (0.24 Wh × 33). That’s the same order of magnitude as the 3 Wh estimate I referenced in the previous article. Hence that seems to be a reasonable estimate for last year, but not anymore. The energy use is likely more than 10 times lower due to improvements in efficiency.

Let me again put this 0.24 Wh (or let’s round to 0.3 Wh, which conveniently aligns with EpochAI’s updated estimate for ChatGPT) into perspective for an individual.3

0.24 Wh is the equivalent of watching television for 9 seconds. So if you did 10 prompts, that would be one-and-a-half minutes of TV. 100 prompts would be 15 minutes.

So Gemini is small compared to TV-watching, but importantly, TV-watching is also small compared to most other uses of energy.

Here are a few other activities that use around 0.24 Wh:

Microwaving for 1 second

Running a laptop for 17 seconds

Running a fridge for 6 seconds

Charging a mobile phone by around 2%
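These comparisons are just energy divided by power. Here is a minimal sketch of the conversion in Python. The wattages are my own round-number assumptions, picked to roughly reproduce the figures above; they are not measurements and real appliances vary a lot.

```python
# Median Gemini text query energy, as reported by Google.
QUERY_WH = 0.24

# Assumed average power draw in watts. Illustrative round numbers only,
# chosen to roughly match the comparisons in the article.
ASSUMED_POWER_W = {
    "microwave": 900,
    "television": 100,
    "laptop": 50,
    "fridge": 150,
}


def seconds_of_use(energy_wh: float, power_w: float) -> float:
    """Seconds an appliance drawing power_w watts can run on energy_wh watt-hours."""
    # 1 Wh = 3600 watt-seconds, so divide the watt-seconds by the power draw.
    return energy_wh * 3600 / power_w


if __name__ == "__main__":
    for name, watts in ASSUMED_POWER_W.items():
        print(f"{name}: {seconds_of_use(QUERY_WH, watts):.1f} s per query")
```

Swapping in the wattage of your own devices will shift the seconds up or down, but not the order of magnitude.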

In my previous article, I compared the average electricity consumption of Brits and Americans, using the estimate of 3 Wh per query.

Here’s an update if we assume the 0.24 Wh (or 0.3 Wh when rounded) figure.

If the average Brit were to do 10 queries per day, it would equate to around 0.03% of daily electricity use. 100 queries would be 0.3%. For the average American, 100 queries would be around 0.1%.

Again, unless you’re an extreme power user, asking AI questions every day is still a rounding error on your total electricity footprint.
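If you want to plug in your own numbers, the calculation is a one-liner. This is a rough sketch: the per-person daily electricity figures are round-number assumptions of mine (about 12,000 Wh for the UK and 34,000 Wh for the US), so the outputs will only approximately match the rounded percentages above.

```python
# The article's rounded-up per-query figure, in watt-hours.
QUERY_WH = 0.3

# Assumed per-person daily electricity use in Wh. Rough round numbers,
# used here only for illustration.
ASSUMED_DAILY_WH = {"UK": 12_000, "US": 34_000}


def share_of_daily_use(queries_per_day: int, daily_wh: float,
                       query_wh: float = QUERY_WH) -> float:
    """Percentage of a person's daily electricity use taken up by the queries."""
    return 100 * queries_per_day * query_wh / daily_wh


if __name__ == "__main__":
    for country, daily_wh in ASSUMED_DAILY_WH.items():
        for n in (10, 100):
            pct = share_of_daily_use(n, daily_wh)
            print(f"{country}, {n} queries/day: {pct:.3f}% of daily electricity")
```

Passing `query_wh=3` instead gives the more conservative picture from the previous article.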

Google’s Gemini carbon footprint estimates are incredibly low

The other key number in Google’s report is that the median text query generates 0.03 grams of CO2e.

Now this is extremely small — I was surprised at how small — and far lower than previous estimates that I’ve seen. In the previous article, I used a rough number of 2 to 3 grams based on existing estimates. How is it possible that Google’s figure would be 100 times lower?

For one thing, Google reports that its carbon emissions per query have dropped 44-fold in the last 12 months. A big part of this is the drop in energy use (33-fold), but it is also because Google assumes a much lower figure for the carbon intensity of electricity.4

If we use this reported 44-fold drop, we can estimate that a year ago, its emissions would have been 1.32 grams of CO2e. That’s pretty close to the previous estimates of “a few grams”.
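Here is a small sketch of that back-calculation, using only the four numbers Google reports. The "implied carbon intensity" is my own simplification: it treats all of the reported emissions as coming from electricity, which may not be exactly how Google accounts for them, so read it as a rough consistency check rather than Google's actual figure.

```python
ENERGY_WH_NOW = 0.24    # Wh per median text query (reported by Google)
EMISSIONS_G_NOW = 0.03  # g CO2e per median text query (reported by Google)
ENERGY_DROP = 33        # reported fold reduction in energy over 12 months
EMISSIONS_DROP = 44     # reported fold reduction in emissions over 12 months


def year_ago(value_now: float, fold_drop: float) -> float:
    """Back out last year's value from today's value and the reported fold drop."""
    return value_now * fold_drop


def implied_intensity_g_per_kwh(emissions_g: float, energy_wh: float) -> float:
    """Emissions per kWh, assuming (simplistically) all emissions come from electricity."""
    return emissions_g / energy_wh * 1000


if __name__ == "__main__":
    energy_then = year_ago(ENERGY_WH_NOW, ENERGY_DROP)
    emissions_then = year_ago(EMISSIONS_G_NOW, EMISSIONS_DROP)
    print(f"Energy a year ago: {energy_then:.2f} Wh per query")
    print(f"Emissions a year ago: {emissions_then:.2f} g CO2e per query")

    now = implied_intensity_g_per_kwh(EMISSIONS_G_NOW, ENERGY_WH_NOW)
    then = implied_intensity_g_per_kwh(emissions_then, energy_then)
    print(f"Implied intensity now: {now:.0f} g/kWh, a year ago: {then:.0f} g/kWh")
    print(f"Intensity improvement: {then / now:.2f}x")
```

The last line comes out at 44 / 33, or about 1.33. In other words, most of the 44-fold drop in emissions is the 33-fold drop in energy, with the remaining third or so coming from the lower assumed emissions per unit of electricity.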

Another factor is how much training is factored into emissions estimates. Google is very clear that these estimates — for energy and emissions — are only for inference. “Inference” is basically running an already-trained model to generate an output. It’s what you’re doing every time you ask Gemini or ChatGPT a question.

That means Google’s estimates are potentially more limited than some others that do include training. For example, the Mistral AI life-cycle analysis we looked at earlier appeared to include both training and inference (again, the report wasn’t that heavy on methodology, so it’s hard to know specifically how this was accounted for). It had a figure of just over 1 gram CO2e per page of text.

Whether we take Google’s 0.03 grams or higher estimates of a few grams, the main conclusion does not change. That might seem crazy given that the numbers are orders of magnitude different. But the point is that these emissions are small compared to most people’s current footprint. When I assumed the figure was 2 grams, I calculated that the average Brit doing 10 searches a day would increase their carbon footprint by 0.17%. For the average American, 0.07%.

If we were to take Google’s figure of 0.03 grams, we would add a zero or two more after the decimal point in those percentages. Rather than just being very low, the carbon emissions of using AI chatbots would be incredibly low.

Some final points and caveats

Based on Google’s analysis, I’m not going to automatically adopt “0.24 Wh” as the number for a text query. Google’s new paper is very welcome, but it does exclude a few things that they are transparent about5:

External networking. That is the energy used by your own internet service provider before it “gets to” Google. They don’t include this because it’s not within their control. But it’s also true that the energy use here is tiny compared to the energy used for compute. It would have negligible impact on the final numbers.

End user devices. They don’t include energy used by your own hardware: computer, monitor, whatever you’re using.

Training. I already mentioned this a few times. They are focused on the marginal energy used per AI prompt. They are not looking at the energy use of training.

Energy use is still going to vary quite a bit based on the length of query/response (see the chart below), and the efficiency of the specific LLM, so I still think the assumption that energy use for a typical query is somewhere between 0.3 Wh and 3 Wh is fine for most people.

I hope that other tech companies now follow suit, and publish their own estimates — in a more transparent way — so we can understand how these models compare, and how they’re changing over time (which they will).

1. At least it's the first that I'm aware of.

2. I did ask about the footprint of long, Deep Research queries. But they did not have these specific numbers: only the median across all text queries.

Obviously Deep Research responses will use more energy, but I don't know how much more.

3. I am aware that you’d usually round 0.24 Wh down to 0.2 Wh, rather than up to 0.3 Wh. I’m rounding up, since 0.3 Wh is a number I’ve previously referenced a lot, so it keeps things better-aligned, without too many different numbers flying around.

4. Google has a number of “Power Purchase Agreements” (PPAs), where it agrees to buy electricity from a clean energy project at a fixed price. Now that does not necessarily mean that this electricity is delivered to power Google’s data centres, but the idea is that Google is providing funding and demand for the clean energy projects, reducing the carbon intensity of electricity across the grid.

It then includes these PPAs (the purchased clean electricity) in its carbon intensity of electricity figure. This can mean that the assumed carbon emissions per unit of electricity are lower than the answer you’d get if you just used the state-level or national-level average.

5. It’s also worth noting that almost all of the estimates we’ve looked at are based on what we call “bottom-up” models. They try to measure or estimate the power draw used through hardware and data centres, and multiply by the time spent per inference (or query). There are alternative “top-down” methods where you first try to estimate the total energy use of data centres or hardware, then try to allocate that down to specific activities (like a single query).

The ideal scenario is that top-down and bottom-up estimates would match. In reality, top-down estimates are often a bit higher, suggesting that some of these bottom-up approaches have limitations around what’s included.

Thanks for the analysis Hannah. Good research. Even though energy use per query is very low and most likely will get lower, we need to consider the rebound effect. By making AI efficient, easy, and cheap, more and more systems will rely on it. Many already do. As more users and back-end systems use it, overall energy requirements will increase. Just as the first electric lightbulbs were horribly inefficient, we use much more electricity for electric LEDs now since they have become efficient, cheap, and ubiquitous. Human civilization tends to increase in complexity and energy intensity over time, even if any individual technology gets more efficient.

Thank you so much for following up with this. Your reports are valuable when discussing this, and there's a lot of heat, especially in climate-conscious circles, around the subject.

That said, the pinch of salt I would take when processing Google's numbers is huge. They have been notoriously and consistently nebulous about their emissions, and wilfully conflate Scope 1, 2, 3 etc.

The real question is not what the carbon footprint of "me" using ChatGPT is, it's what is the carbon footprint *increase* of this huge surge towards AI. As ever, Jevons keeps looking over our shoulder here.